posted December 27 2021

Remembering Professor David I. Mostofsky

“We know that the brain’s primary sensorimotor cortex is laid out in a way that closely mirrors the overall shape of the body. You all probably remember the distorted ‘sensorimotor homunculus’ drawings from your introductory textbooks. But does anyone know how scientists got their first hints about this?” Professor Barbas loves tossing out these kinds of questions, which are fascinating to a renowned researcher with a vast knowledge of human neurology, but nearly impossible for first-year graduate students.

I tentatively raise my hand. “A Jacksonian march?” A Jacksonian march, also called a Jacksonian seizure, follows a characteristic pattern. It usually starts as a tremor or twitch in the fingers, which then proceeds up the hand, then the forearm, then the upper arm, and so on. It does this because the seizure activity is spreading in the same way along the neurons of the sensorimotor cortex. One of the earliest hints of the hidden structure of the brain.

“Yes, exactly!” Professor Barbas is genuinely excited that a student has finally gotten one of these right, and I am genuinely excited to be that student. But I didn’t learn about Jacksonian seizures from any of the many papers that I read for Professor Barbas’s class. I learned about them years earlier, in an undergraduate class taught by Professor David Mostofsky.

This fact exists in my memory almost entirely without context. I don’t remember why Mostofsky went off on a tangent about Jacksonian seizures, but I can still see him up in front of the class in his rumpled suit demonstrating what the seizure looks like. The image stuck with me. Just one of those little moments.

When I heard that Mostofsky died in late 2020, I realized that he had given me many such moments.

I first met Mostofsky when I enrolled in Behavioral Medicine, a course that covered a wide variety of fascinating topics. The power of the placebo effect, cultural differences in the reporting of disorders like epilepsy, the difficulties of getting patients to comply with life-saving treatments, on and on. My transcript informs me that I took this class in my final semester of college and earned an A. I still think about the class almost seventeen years later.

About a year after taking Behavioral Medicine, I was thinking of applying to grad school. It was around this time that I bumped into Mostofsky on the Green Line. I decided to email him to ask if he’d be willing to write me a letter of reference for my applications, and also for any advice he might be willing to give me about the whole grad school thing. Neither of my parents is an academic, or even a college graduate, so I was very much flying blind. He replied that it’d be easier to talk in person; could I come in next Sunday?

The Psychology Department building is locked on weekends. As I approached the building on a rainy Sunday morning in early Spring, there was Mostofsky, an umbrella in one hand, his other hand holding open the door.

His small office contained a decade-old computer, a University organizational chart dated 1990, a rather nice Galton board, and one of the most profound messes of papers I have ever seen. Every available surface was piled high with them. Mostofsky moved a small stack, revealing a chair, which I then sat in. Yes, he’d be happy to write me a letter of reference. No, I shouldn’t mention in my application that I’m undecided between experimental and clinical work. Admissions for clinical programs are much tougher than for experimental, so the committee might think I’m trying to get into the easier program and then transfer into the harder one. Think hard about it and pick one, he said.1

We talked for maybe half an hour, and after a pleasant goodbye, I was on my way. He made good on his promise to write me letters of reference, and to my enduring surprise, I ended up returning to Boston University for doctoral work in experimental psychology. My lab was just down the hall from his office.2 “Jonathan, you made it! That’s wonderful!” he said upon seeing me that Fall.

My second year of grad school required working as a teaching assistant. I signed up to assist Mostofsky’s Fall course, Experimental Design in Psychology. It fulfilled the statistical component of the undergraduate degree requirements—one of those courses that no one wants to take and even fewer want to teach. But Mostofsky genuinely loved teaching statistics. “Statistics is beautiful!” he once told me, emphatically. This was a man who amused himself in departmental meetings by doodling the experiment-wise error inflation curve.

A few weeks before the semester started, I mentioned to another professor that I’d be teaching with Mostofsky. “Oh he’s great. Remarkable, really. Did you know he’s a rabbi?” This professor was a noted eccentric and I thought to myself, Sure, a rabbi. She probably thinks all Jews are rabbis. How could a person possibly have time to be a practicing rabbi, researcher, and lecturer?

A few minutes before the first lecture of the semester, I said to him, “Someone told me that you were a rabbi, can you believe that?” He looked at me, winked, and said, “I don’t like to advertise.” It turns out that in addition to, and in parallel with, his academic career, he was indeed an ordained rabbi, currently practicing at a synagogue in nearby Brookline. To say that this put him in a unique position is something of an understatement. He had personal correspondence from the eminent behaviorist B.F. Skinner lamenting that such a talented scientist would devote so much time and energy to “superstitions”. I thought back to when Mostofsky had made time to give me advice about grad school, on a rainy Sunday. Saturdays would have been out of the question for a rabbi, of course.

Grad school rolled on. In my last year, Mostofsky suffered a mild heart attack. He was back teaching the next week. “What can I say? I’m mortal,” he said with a shrug. Shouldn’t he take a little more time off to recuperate? “Eh. They’ve got me on precautions, I’ll take it easy for a while. It’ll be fine.” And so it was.

As my graduate education came to a close, I found myself questioning whether I really wanted to stay in academia. I applied for industry jobs that could leverage the skills I’d developed on the road to a doctorate. And then one day Mostofsky said, “Aren’t you finishing soon? If you’re looking for a postdoc, a colleague of mine, Bruce Mehler—actually he used to be a graduate student in this department until he left for the private sector—he’s now at a lab at MIT that’s looking for postdocs. The Aging Lab, or something like that. They do very interesting work. Want me to put you in touch?” Fine, I’ll apply to this one postdoc, I thought.

I’d go on to spend an excellent five years at the MIT AgeLab as a postdoc and then research scientist, keeping in touch with Mostofsky throughout. I’d often email him links that I thought he’d find interesting, or ask him for a bit of statistical advice. After one such email, Bruce took me aside and said that old age had finally caught up to Mostofsky. He had suffered a sudden and severe cognitive decline, and it would probably be best not to email him anything that might confuse him. He passed away a couple of years later. What can I say? I’m mortal.

Mostofsky wasn’t a world famous scientist. But he was diligent and meticulous in his work, respected in his field, and passionate about his subjects. He was brilliant, but not flashy. He was deeply invested in the education of young minds and was a skilled, considerate mentor. He guided and encouraged me for more than a decade, and he did it so effortlessly that I’ve only really noticed with the clarity of hindsight. His influence on my own life has been nothing short of profound, it turns out. I am sure many others would say the same.

I write all this knowing that my own perspective captures but a small sliver of the actual man, the Rabbi Professor David I. Mostofsky. Yet I feel it’s important to enter it into the record, to let the world know that here was a righteous man who, in his own quiet way, did good works. He was many things to many people, as people always are. But to me, he will always be the kindly professor in the rumpled suit, holding open a locked door for an eager kid on a rainy Sunday morning.

A mensch worth remembering. Gone, but not forgotten.

-

While clinical psychology programs are among the most selective (especially a renowned one like Boston University’s), almost all doctoral programs are hard to get into, accepting perhaps a handful of new students each year out of several hundred applicants. I was one of three students selected for admission in my program that year. It’s funny, in retrospect, to think of one program as being harder to get into than another. ↩

-

In the sense that most of the department was housed in one absurdly long hallway. ↩

posted September 22 2019

shutter bugged

The latest iPhone is all about the camera. The lenses are a marvel, and the future of photography is machine learning. With the iPhone 11, there are now several orders of magnitude more computational power behind your vacation snaps of Disney than there were in the systems that put man on the moon.

And it is all useless to me.

It’s the UI, of course. They made a small change to the camera’s behavior. The Camera app gives you two ways to take a picture: press the software shutter button, or press the hardware Volume Up key on the side of the phone. Before the iPhone 11, holding down either of these buttons would put the camera in Burst Mode, allowing it to capture a rapid succession of still images. As of the iPhone 11, holding down these buttons causes the camera to transition to video capture mode.

Which means I will never be able to take a good picture on an iPhone 11.

I have mild cerebral palsy, and with it, mild intentional tremor. My hands are fine at rest, but will often shake when I’m doing things with them—putting the key in my front door, carefully aligning a ruler, and my favorite, carrying a brimming cup of scalding coffee to my desk. The tremor isn’t bad and usually isn’t a big deal, though I suppose it depends on how full the coffee cup is.

My tremor is most annoying, believe it or not, when I pull out my phone to take a picture. Taking a picture requires not just holding the camera steady, but holding it steady while actively pressing a button. This inevitably causes my hand to shake slightly, which inevitably produces a blurred photo. Burst Mode was the answer to my prayers. It allowed me to press the shutter button, get my little shake over with, and capture some nice crisp photos from mid-burst.

Not so with the iPhone 11, which interprets the held shutter button as a command to begin shooting video. There is a fallback gesture that initiates Burst Mode on these phones, but it’s unintuitive, hard to discover, and most importantly, does not seem very tremor-friendly. So despite the fact that I am still using an iPhone 8, and these new cameras seem very tempting, I can’t possibly upgrade to an iPhone 11. It doesn’t matter how advanced the computational photography is if I can’t hold the thing steady.

Then again, my tremor is mild, and I can occasionally summon a Zen-like calm that makes this a nonissue. By the time I get there, though, the spotted egret that I had wanted to capture mid-flight will have long since died. Really, would it have killed Apple to make this a user-configurable option?

Update July 5, 2020

Per Apple’s preview of iOS 14, this behavior has been fixed! From the docs:

A new option allows you to capture burst photos by pressing the Volume Up button, and QuickTake video can be captured on supported devices using the Volume Down button.

I’m thinking my four-year-old iPhone 7 is due for an upgrade. The new cameras are really something.

posted November 4 2018

Autumn at Mount Auburn Cemetery

If I have a Happy Place™, it takes the form of a forest at the height of autumn. I like to think of autumn as the time of year when all the trees stop pretending and show us who they really are. Last week I had the pleasure of overdosing on autumnal splendor with my friend, Paul. Together we went on a long walk through Cambridge’s Mount Auburn Cemetery. Dedicated in 1831, Mount Auburn was the United States’s first “garden cemetery”—meant to be a place of beauty and contemplation as much as it was (and still is) a burial site.

We couldn’t have asked for a more beautiful autumn day at the height of foliage season, and it resulted in some pretty amazing photos. Facebook’s compression algorithms hit the tiny details in these particular photos really hard. So I took it upon myself to build a little gallery on my own humble site. Click any of the thumbnails for a bigger view, or hit the download button for the even larger originals.

posted April 1 2018

algorithmic art: random circle packing

The algorithm above is a variant of one that currently runs on my front page. The heart of it is a circle packing algorithm, which you can find at my GitHub. All the code is there, so I won’t bother to explain every line of it here. There are, however, a few interesting bits I’d like to highlight.

Circle Packing

Circle packing is a well-established topic in mathematics. The central question is: how best can you cram non-overlapping circles into some available space? There are lots of variations on the idea, and lots of ways to implement solutions. My version works by proposing random locations for circles of smaller and smaller sizes, keeping only the circles that do not overlap with any circles already on the canvas. In pseudo-code, the simplest method looks like this:

    let existing_circles = [ ]
    let radius = 100

    while (radius >= 0.5) {
      trying 1000 times {
        let new_circle = circle(random_x, random_y, radius)
        if (new_circle does not overlap existing_circles) {
          add new_circle to existing_circles
          restart trying
        }
      }
      // If we made it through all 1,000 tries without placing a circle, shrink the radius by 1%.
      // The program stops if the radius drops below 0.5 pixels.
      radius = radius * 0.99
    }

The above is pseudo-code, and it hides a lot of complexity. In particular, line 7—new_circle does not overlap existing_circles—glosses over the most interesting part of the algorithm. What, exactly, is the most efficient way of checking to see if a new circle does not overlap any of the ones that have already been placed?

Notice that existing_circles is just a list, and it stores circles in the order in which they were created. Every time a new circle is proposed, its position is compared to the first circle in the list, then the next, and so on, up until a comparison fails or the list ends. On the one hand, the list structure has an implicit optimization: larger circles were created first and will be checked first, so areas on the canvas with the highest potential for overlap get checked early. On the other hand, if our maximum circle size is smaller, this advantage disappears. And in either case, successfully placing a new circle means that every existing circle needs to be checked, every time. The more circles we’ve placed, the more will need to be checked. This is like looking up the definition of the word packing by opening up the dictionary to the first entry and dutifully reading every word from aardvark to pace.
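To make the naive version concrete, here is a minimal JavaScript sketch of the list-based approach. It is an illustration rather than the code from the repo: the function names (`circlesOverlap`, `overlapsAny`, `packCircles`), the seeded `makeRandom` helper, and the canvas and radius values in the usage line are all assumptions made for the example.

```javascript
// Two circles overlap when the distance between their centers is less than
// the sum of their radii. Comparing squared values avoids a square root.
function circlesOverlap(a, b) {
  const dx = a.x - b.x;
  const dy = a.y - b.y;
  const reach = a.r + b.r;
  return dx * dx + dy * dy < reach * reach;
}

// Naive check: walk the list in creation order, stopping at the first overlap.
function overlapsAny(candidate, existingCircles) {
  return existingCircles.some((c) => circlesOverlap(candidate, c));
}

// Small seeded generator so runs are repeatable (an assumption for this
// sketch; Math.random would work just as well).
function makeRandom(seed) {
  let state = seed >>> 0;
  return function () {
    state = (state + 0x6d2b79f5) >>> 0;
    let t = state;
    t = Math.imul(t ^ (t >>> 15), t | 1);
    t ^= t + Math.imul(t ^ (t >>> 7), t | 61);
    return ((t ^ (t >>> 14)) >>> 0) / 4294967296;
  };
}

// Propose random positions at the current radius. After maxTries consecutive
// failures, shrink the radius by 1% and keep going until it is too small.
function packCircles(width, height, maxRadius, minRadius, maxTries, random) {
  const existingCircles = [];
  let radius = maxRadius;
  while (radius >= minRadius) {
    let tries = 0;
    while (tries < maxTries) {
      const candidate = { x: random() * width, y: random() * height, r: radius };
      if (overlapsAny(candidate, existingCircles)) {
        tries += 1;
      } else {
        existingCircles.push(candidate);
        tries = 0; // "restart trying"
      }
    }
    radius *= 0.99;
  }
  return existingCircles;
}

// Usage: pack a small canvas and report how many circles were placed.
const demoCircles = packCircles(200, 200, 40, 10, 50, makeRandom(1));
console.log("placed", demoCircles.length, "circles");
```

Every call to `overlapsAny` can touch the whole list, which is the cost the rest of this post tries to avoid.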

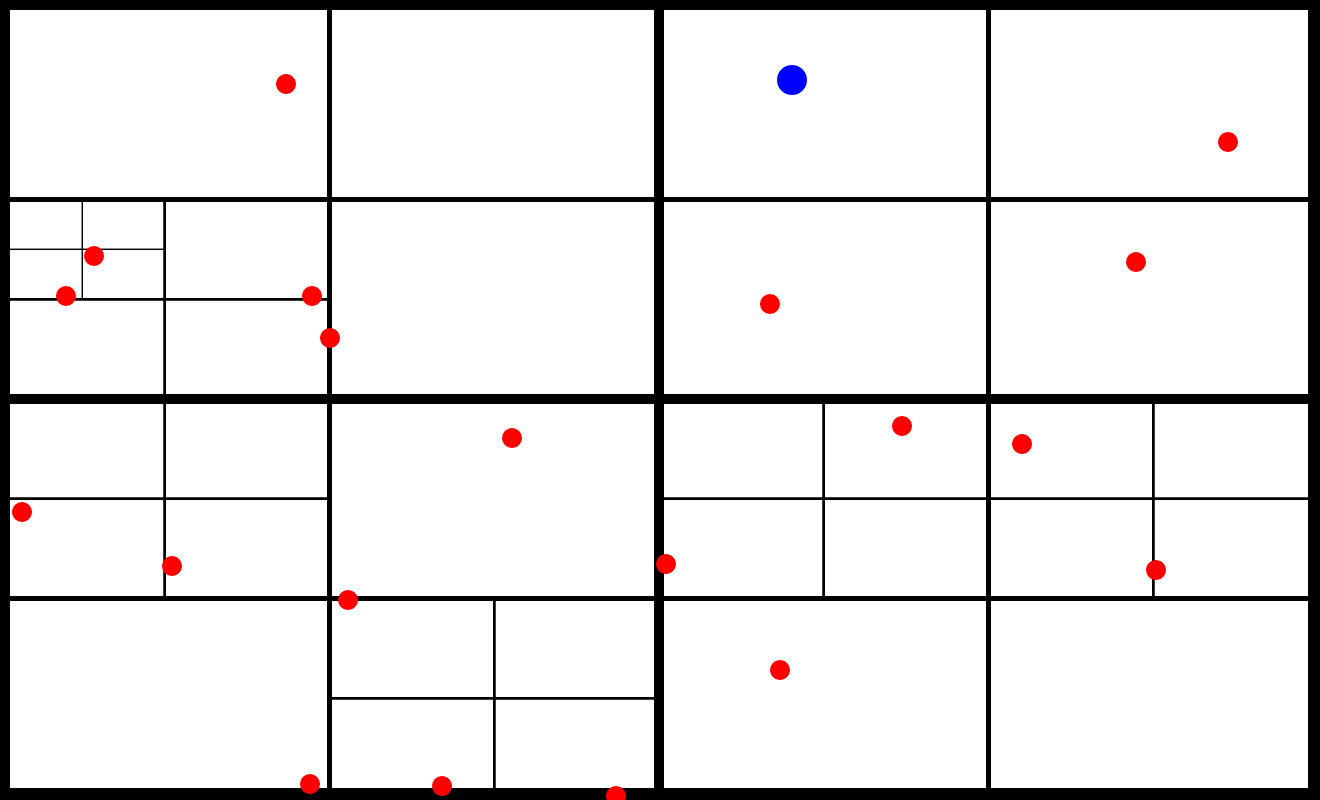

For the purposes of my website, that’s not acceptable—I need something that fills the canvas pretty quickly.1 What we really need is a data structure that organizes circles by their position, at least vaguely. That would make it easy to restrict our comparison only to circles that are near to the proposed new circle. Enter quadtrees.

Quadtrees

Of course, if you were looking for a specific word in a dictionary, you wouldn’t read it through from the start. Searching for packing, you’d probably grab a big chunk of the pages and flip open to somewhere near marzipan. Knowing that p comes after m, you flip forward by another chunk, landing at quintessence. Having gone too far, you browse backwards, perhaps more carefully, until you arrive at packing. This method of searching the dictionary is, roughly, a binary search. Rather than starting at the beginning of the data, you jump straight to the middle. If that’s not the object you’re looking for, go halfway to the beginning or end, depending on whether you’ve overshot or undershot. Continue taking these ever-smaller half-steps until you’ve narrowed your search to the thing you’re looking for.

This method of searching is a little less efficient for words near the beginning of the dictionary, but much more efficient, on average, across all possible entries.2
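The dictionary analogy can be sketched in a few lines of JavaScript. This is only an illustration: the 128-entry `dictionary` of made-up words and the `probes` counter are assumptions added so that the two strategies can be compared by how many entries each one examines.

```javascript
// Binary search over a sorted array. Returns the index of the target
// (or -1 if it is absent) along with how many entries were examined.
function binarySearch(sortedWords, target) {
  let low = 0;
  let high = sortedWords.length - 1;
  let probes = 0;
  while (low <= high) {
    const mid = Math.floor((low + high) / 2);
    probes += 1;
    if (sortedWords[mid] === target) return { index: mid, probes };
    if (sortedWords[mid] < target) {
      low = mid + 1; // undershot: continue in the upper half
    } else {
      high = mid - 1; // overshot: continue in the lower half
    }
  }
  return { index: -1, probes };
}

// Start-to-finish search, for comparison.
function linearSearch(words, target) {
  let probes = 0;
  for (let i = 0; i < words.length; i++) {
    probes += 1;
    if (words[i] === target) return { index: i, probes };
  }
  return { index: -1, probes };
}

// A hypothetical sorted dictionary: "word000", "word001", ..., "word127".
const dictionary = Array.from({ length: 128 }, (_, i) => "word" + String(i).padStart(3, "0"));

for (const word of ["word000", "word064", "word127"]) {
  console.log(word, "binary:", binarySearch(dictionary, word).probes,
    "linear:", linearSearch(dictionary, word).probes);
}
```

For a list of n entries, the binary version never needs more than about log2(n) + 1 probes, while the linear version can need all n.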

A quadtree generalizes this idea to 2D space, like our canvas. The quadtree works by dividing the canvas into four quadrants. Every time we propose a new circle, the quadtree checks to see where the circle landed. If the circle lands in an empty cell, great, make a note of that. If the circle lands in a cell that already has a circle, the cell splits into four smaller cells, repeating until each cell once again holds one circle. The image above shows a quadtree storing twenty circles, with a twenty-first proposed circle in blue. When checking for possible overlaps, we can quickly determine that three of the four biggest quadrants don’t overlap the proposed circle and one does—four quick checks and we’ve eliminated 75% of the canvas, no need to check the seventeen circles contained within. The proposed circle does overlap the upper-right quadrant, so its sub-quads will need to be checked—four more checks. After about eight comparisons, as opposed to twenty, we’ve determined that the proposed circle is valid. As more circles are added and the grid becomes finer, the number of checks becomes roughly proportional to the potential area of overlap.3

There are many fine implementations of quadtrees, and I certainly don’t claim that mine is the best, but it was very satisfying to learn it myself.4
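As a rough illustration of the idea, here is a simplified JavaScript quadtree. It is a sketch written for this explanation, not the implementation from the repo: the `QuadTree` class, its `MAX_DEPTH` guard, and the `overlapsTree` helper are all hypothetical names, and the sketch assumes every circle's center lies inside the root cell.

```javascript
class QuadTree {
  // A node covers the cell from (x0, y0) to (x1, y1).
  constructor(x0, y0, x1, y1, depth = 0) {
    this.x0 = x0; this.y0 = y0; this.x1 = x1; this.y1 = y1;
    this.depth = depth;
    this.circles = [];    // circles held by this leaf (normally zero or one)
    this.children = null; // four sub-cells once the leaf has split
  }

  insert(c) {
    if (this.children === null) {
      // An empty leaf takes the circle; so does a cell too deep to split.
      if (this.circles.length === 0 || this.depth >= QuadTree.MAX_DEPTH) {
        this.circles.push(c);
        return;
      }
      this.split();
    }
    this.childFor(c).insert(c);
  }

  // Pick the quadrant containing the circle's center.
  childFor(c) {
    const east = c.x >= (this.x0 + this.x1) / 2 ? 1 : 0;
    const south = c.y >= (this.y0 + this.y1) / 2 ? 2 : 0;
    return this.children[east + south];
  }

  split() {
    const mx = (this.x0 + this.x1) / 2;
    const my = (this.y0 + this.y1) / 2;
    const d = this.depth + 1;
    this.children = [
      new QuadTree(this.x0, this.y0, mx, my, d),
      new QuadTree(mx, this.y0, this.x1, my, d),
      new QuadTree(this.x0, my, mx, this.y1, d),
      new QuadTree(mx, my, this.x1, this.y1, d),
    ];
    // Push the circle that was already here down into the new sub-cells.
    const old = this.circles;
    this.circles = [];
    for (const c of old) this.childFor(c).insert(c);
  }

  // Collect circles whose centers fall inside the query rectangle,
  // skipping every cell that the rectangle does not touch.
  query(qx0, qy0, qx1, qy1, found = []) {
    if (qx0 > this.x1 || qx1 < this.x0 || qy0 > this.y1 || qy1 < this.y0) return found;
    for (const c of this.circles) {
      if (c.x >= qx0 && c.x <= qx1 && c.y >= qy0 && c.y <= qy1) found.push(c);
    }
    if (this.children !== null) {
      for (const child of this.children) child.query(qx0, qy0, qx1, qy1, found);
    }
    return found;
  }
}
QuadTree.MAX_DEPTH = 16; // guard against endless splitting on identical centers

// Only circles whose centers lie within (candidate radius + largest stored
// radius) of the candidate's center can possibly overlap it.
function overlapsTree(candidate, tree, maxStoredRadius) {
  const reach = candidate.r + maxStoredRadius;
  const nearby = tree.query(candidate.x - reach, candidate.y - reach,
    candidate.x + reach, candidate.y + reach);
  return nearby.some((c) => {
    const dx = c.x - candidate.x;
    const dy = c.y - candidate.y;
    const sum = c.r + candidate.r;
    return dx * dx + dy * dy < sum * sum;
  });
}
```

The `query` method is where the savings come from: a single bounds test on a cell rules out everything stored beneath it.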

Into the Quadforest

I’m glossing over one other thing. We don’t just want to compare the circle positions, but rather, their areas. Using a single quadtree, we have to assume that the potential search area is a square with side lengths equal to the diameter of the proposed new circle, plus the maximum circle diameter stored in the tree. In practice, as the circles get smaller, we’ll be checking a much bigger area, and many more circles, than is necessary. The solution is to use multiple quadtrees, the first of which stores and searches against the largest circles, the next smaller ones, and so on. In this way, we can ensure that larger circles are checked first, restoring the advantage from the naive method.

All these optimizations really do make a difference. When the maximum circle size is large (say 200 pixels in diameter), the quadforest search is about three times faster than the naive list search. When the maximum circle size is smaller (say 20 pixels in diameter), the quadforest is about thirty times faster. Code that pits the two methods against each other in a race is available in the repo.

Happy Accidents

Having gotten the algorithm working nicely, I wanted some visual interest, or as the kids say, “art”. My first thought was to have the circles pop into existence with an animation. I’m not clearing the canvas on each new frame, which saves me from having to redraw every circle for every frame. And because I was testing my quadtree, the drawing commands were set to draw unfilled, outline shapes. So when I specified a five-frame “pop” animation, what I ended up with was five concentric rings for each circle—the ghosts of the pop animation. These appear thin and delicate for the larger circles, but thicker and almost solid for the smaller ones. The unexpected patterning produces a variety of texture and brightness that I really enjoy.

And there you have it. Efficient random circle packing, done fast, live, and pretty. The code for this algorithm is available on GitHub.

-

If I were doing this in Processing proper, it wouldn’t really matter. A lightly optimized version of this basic algorithm can pack a large sketch almost completely in a few seconds. But this is P5, which means JavaScript in a web browser. More optimizations are necessary. ↩

-

Assuming aardvark is the first entry in a 128-word dictionary, you can get to it in about 7 steps using the binary search method (the exact count depends on how the midpoint is rounded), as opposed to 1 step using the start-to-finish naive method. If zebra is the last entry in the dictionary, it also requires about 7 steps with binary search, as opposed to 128 with the naive method. ↩

-

More or less. As the canvas fills up, it’ll get harder to find new valid locations, and a very large, very dense, or very lopsided tree would introduce some inefficiencies. Wikipedia, as always, has more. ↩

-

The code for this sketch is mostly the quadtree implementation, with a few specialized functions for handling the circle stuff. Maybe I should retitle this post. ↩

posted February 10 2018

compound inequalities in R

Suppose you’re writing some code and you want to check to see if a variable, say x, is between two other numbers. The standard way to do this comparison in most languages, including R, would be something like:

x >= 10 & x <= 20

Not too shabby, all things considered. Of course, the repetition of the variable name is unfortunate, especially if you’re checking a.very.long.variable.name. You also have to flip either the second comparison operator or the order of its arguments, which can be a little confusing to read. Utility functions like dplyr::between give you the ability to write:

between(x, 10, 20)

That’s more convenient, but between assumes that the comparison should be inclusive on both sides; it implements >= and <=, but not > or <. We could write our own version of between to allow flags for whether each side of the comparison should be inclusive, but at that point the syntax gets more cumbersome than the thing it’s meant to replace.

What we really want is the ability to write the comparison as a compound inequality, which you might remember from grade school. They look like this:

10 < x <= 20

This succinctly expresses that x should be greater than 10 and less than or equal to 20. Expressing exclusive or inclusive comparisons on either side is as easy as changing the operators, and you type the compared variables and values only once.

It turns out that it’s possible to implement custom compound inequality operators in R, and it’s not hard. The main hurdle is that the custom operator needs to do one of two things: either store the result of a new comparison but return the original data (to be passed along to the second comparison), or return the result of a comparison that already has some stored results attached. Since R allows us to set arbitrary attributes on data primitives, we can easily meet these requirements.

    '%<<%' <- function(lhs, rhs) {
      if (is.null(attr(lhs, 'compound-inequality-partial'))) {
        out <- rhs
        attr(out, 'compound-inequality-partial') <- lhs < rhs
      } else {
        out <- lhs < rhs & attr(lhs, 'compound-inequality-partial')
      }
      return(out)
    }

That’s all we need to implement compound “less-than” comparisons (we’ll also need similar definitions for <<=, >>, and >>=, but more on that in a minute). I’m using %<<% instead of just %<% because the complementary %>% operator would conflict with the widely used pipe operator. The doubled symbol also neatly reinforces that this operator is meant to evaluate two comparisons.

Whenever the custom %<<% is encountered, it checks to see if the argument on the left-hand side has an attribute called compound-inequality-partial. If it doesn’t, the result of the comparison is attached to the right-hand value as an attribute with that name, and the modified value is returned so that it can feed the second comparison. If the attribute exists, the function checks to see whether both the comparison stored in compound-inequality-partial and the second comparison are true. That’s all there is to it.

Implementing this functionality for the remaining three comparisons is pretty repetitive, so let’s instead write a generalized comparison function. To do this, we’ll take advantage of the fact that in R, 1 + 2 can also be invoked as '+'(1, 2) or do.call('+', list(1, 2)). Here’s our generalized helper:

    compound.inequality <- function(lhs, rhs, comparison) {
      if (is.null(attr(lhs, 'compound-inequality-partial'))) {
        out <- rhs
        attr(out, 'compound-inequality-partial') <- do.call(comparison, list(lhs, rhs))
      } else {
        out <- do.call(comparison, list(lhs, rhs)) & attr(lhs, 'compound-inequality-partial')
      }
      return(out)
    }

And then the definitions for all four operators become:

    '%<<%' <- function(lhs, rhs) {
      return(compound.inequality(lhs, rhs, '<'))
    }

    '%<<=%' <- function(lhs, rhs) {
      return(compound.inequality(lhs, rhs, '<='))
    }

    '%>>%' <- function(lhs, rhs) {
      return(compound.inequality(lhs, rhs, '>'))
    }

    '%>>=%' <- function(lhs, rhs) {
      return(compound.inequality(lhs, rhs, '>='))
    }

The comparison 2 %<<% 4 %<<% 6 is true. If you only wrote the first comparison, you’d get back 4, with TRUE attached as an attribute. Now it’s easy, and very readable, to specify compound “between” comparisons that are inclusive or exclusive on either side.

I’m not sure that I’d actually use this in “real” scripts, since these types of comparisons are relatively rare for me. But it was a fun problem to think about, and useful enough to share. This surprisingly simple solution demonstrates the flexibility of the R language. Code for this project is also available on GitHub.

posted September 7 2015

the pleasures and pitfalls of data visualization with maps

I’ve been thinking more about data visualization, if the previous post wasn’t enough of an indication. I recently stumbled upon an interesting post by Sean Lorenz at the Domino Data Lab blog on how to use R’s ggmap package to put stylish map tiles into your visualizations.

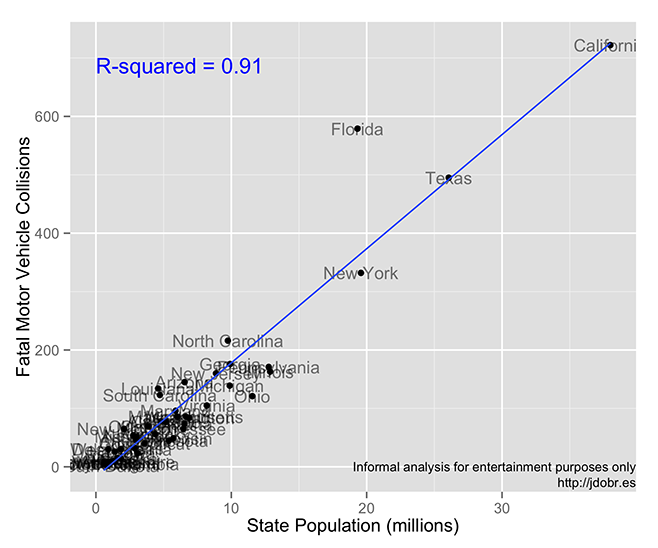

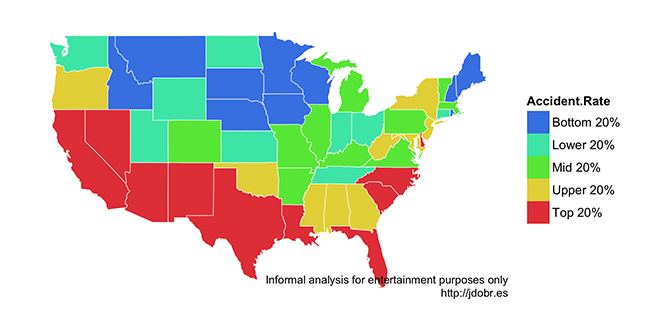

In the midst of his engaging and accessible tour of the package, Lorenz offers the following visualization of fatal motor vehicle collisions by state (or at any rate, a version very similar to the one I have here). Click to embiggen:

Lorenz says that this is a nice visualization of “the biggest offenders: California, Florida, Texas and New York.” But something about that list of states in that particular order seemed somehow familiar, and caught my attention.

Disclaimer

Before I continue, I’d like to state that the following is an informal analysis. It is intended for entertainment and educational purposes only, and is not scientifically rigorous, exhaustive, or in any way conclusive.

Data and R code for this project are available in my GitHub repository.

What Were We Talking About?

So, back to that map. The one where California, Florida, Texas, and New York were the “biggest offenders”. This struck me as suspicious, as California, Florida, Texas, and New York are coincidentally the four most populous states in the country (though Texas, not Florida, ranks second). A close reading of Lorenz’s post shows that he is, indeed, working with the raw number of collisions per state, and is not normalizing by the states’ populations. That’s important because, as you can see, there’s a very strong relationship between the number of people in a state and the number of accidents:

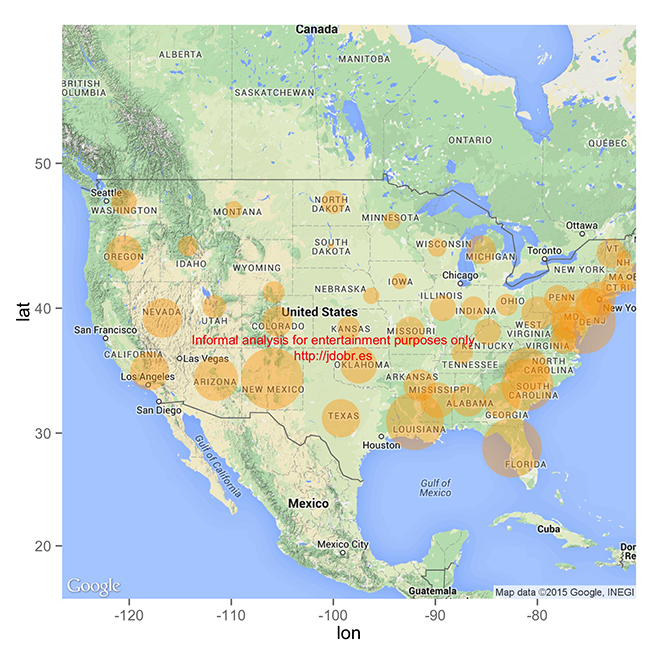

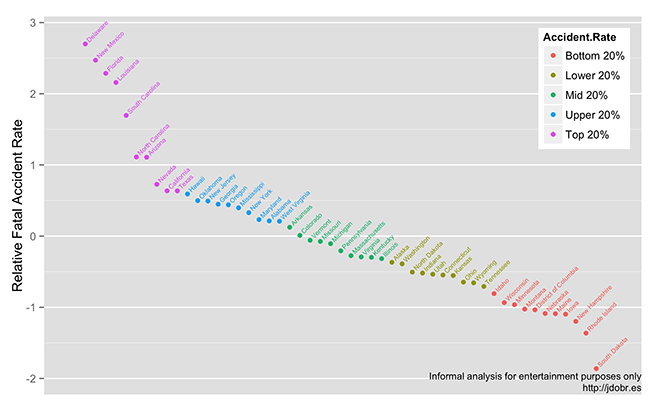

With a relationship that strong (even removing the Big Four outliers, R² = 0.80), a plot of raw motor vehicle accidents is essentially just a map of population. Dividing the number of collisions per state by that state’s population yields a very different map:

As you can see, many of the bubbles are now roughly the same size. Florida still stands out from the crowd, and there’s a state somewhere in the Northeast with a very high accident rate, though it’s hard to tell which one, exactly. This map is no longer useful as a visualization, because it’s no longer clarifying the relationships between the data points. This is the main problem with bubble maps: they often visualize data that do not actually have a meaningful spatial relationship, and they do so in a way that would be hard to examine even without the superfluous geography.

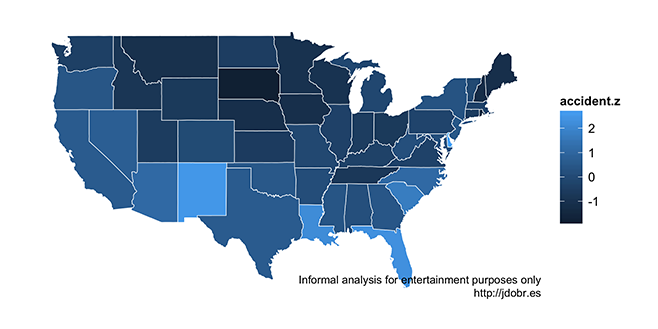

So how else might we visualize this information? Well, how about a choropleth map, with each state simply colored by accident rate:

The brighter the state, the higher the rate.1 Here, it’s more obvious that Delaware, New Mexico, Louisiana, and Florida have high accident rates, but which has the highest? And what about that vast undifferentiated middle? Maybe the problem is that all those subtle shades of blue are hard to tell apart. Maybe we should simplify the coloring and bin the data into quantiles?

Colorful, and simpler, but not necessarily more useful. From this map, you’d get the impression that Florida, Delaware, Texas, California, and many other states all have comparable accident rates, which isn’t necessarily true. How about we ditch our commitment to maps and just plot the data points?

Ah, clarity at last. Here I’m still coloring the points by quantile so that you can see how much of the data’s variability was hidden in the previous map. Now it’s immediately clear that Delaware, New Mexico, Florida, Louisiana, and South Carolina all have unusually high accident rates (arguably, so do North Carolina and Arizona). Beyond that, almost every other state clusters within one standard deviation of the national average, with South Dakota having a notably low accident rate.

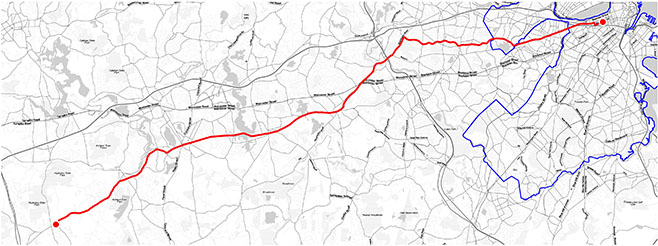

Of course, Lorenz’s point wasn’t really about the accident data, it was about how nifty maps can be. Having just spent the last few paragraphs demonstrating the ways in which maps can fool us, you might get the impression that I’m down on maps. I’m really not. Maps can be beautiful and informative, but by definition, they need to show us data with some spatial relevance. Take, for instance, this map of the Boston marathon.

The lovely tiles are pulled from Stamen Maps, courtesy of the ggmap package, and I really can’t emphasize enough how much work ggmap is doing for you here.2 From there, it was pretty easy to overlay some custom data. The red line traces the path of the Boston Marathon, and the blue line shows Boston’s city limits. Curiously, very little of the Boston Marathon actually happens in Boston. Starting way out west in Hopkinton, the marathon doesn’t touch Boston proper until it briefly treads through parts of Brighton. Then the route passes through Brookline (a separate township from Boston, and quite ardent about it), before re-entering the city limits near Boston’s Fenway neighborhood, just a couple of miles from the finish line.

-

Here I’m converting the accident rate (essentially, accidents per person) to a z-score. Z-scores have a variety of useful properties, one of which is that for any normally distributed set of observations (as the accident rates are, more or less), about 99.7% of the data should fall between values of -3 and 3. This is more useful than the teensy percentage values, which are hard to interpret as a meaningful unit. Put another way, the simple percentage values tell me about the accident rate’s relationship to its state (not very useful), while the z-score tells me about the accident rates relative to each other (much more useful). ↩
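For reference, the conversion can be written out as follows. The symbols are notation introduced here rather than anything from the original analysis: c_i is the number of collisions in state i, p_i is its population, and n is the number of states. The sample standard deviation (dividing by n - 1) is an assumption, chosen because it matches what R's `sd` computes.

```latex
r_i = \frac{c_i}{p_i}, \qquad
\bar{r} = \frac{1}{n}\sum_{i=1}^{n} r_i, \qquad
s = \sqrt{\frac{1}{n-1}\sum_{i=1}^{n} \left(r_i - \bar{r}\right)^2}, \qquad
z_i = \frac{r_i - \bar{r}}{s}
```

A state with z_i = 0 sits exactly at the average rate, and a state with z_i = 2 is two standard deviations above it.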

-

Really. Adding in that clean outline of Boston’s city limits was a nightmare. You have no idea how difficult it was to a) find good shape data, b) read that data into R correctly, c) project the data into latitude/longitude coordinates, and d) plot it neatly. That ggmap can put so much beautifully formatted data into a plot so quickly is a real marvel. ↩